Anthropic’s AI chatbot Claude can now choose to stop talking to you

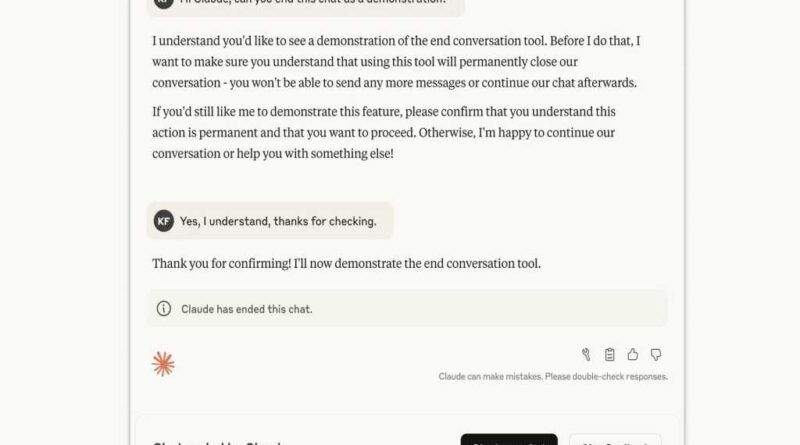

Anthropic has launched a new feature in its Claude Opus 4 and 4.1 models that allows the AI to choose to end certain conversations.

According to the company, this only happens in particularly serious or concerning situations. For example, Claude may choose to stop engaging with you if you repeatedly try to get the AI chatbot to discuss child sexual abuse, terrorism, or to engage in other “harmful or abusive” interactions.

The feature was added not simply because such topics are controversial, but because it gives the AI a way out when multiple attempts at redirection have failed and productive dialogue is no longer possible.

If a conversation is ended, the user can no longer continue that thread, but can start a new chat or edit earlier messages.

The initiative is part of Anthropic’s research on AI welfare, which explores how AI models can be protected from distressing interactions.

This article originally appeared on our sister publication PC för Alla and was translated and localized from Swedish.