NVIDIA RTX 5090 outperforms AMD and Apple working native OpenAI language fashions

Builders and creatives searching for higher management and privateness with their AI are more and more turning to domestically run fashions like OpenAI’s new gpt-oss household of fashions, that are each light-weight and extremely useful on end-user {hardware}. Certainly, you’ll be able to have it run on client GPUs with simply 16GB of reminiscence. That makes it attainable to make use of a variety of {hardware} – with NVIDIA GPUs rising as the easiest way to run these types of open-weight fashions.

Whereas nations and firms rush to develop their very own bespoke AI options to a variety of duties, open supply and open-weight fashions like OpenAI’s new gpt-oss-20b are discovering way more adoption. This newest launch is roughly similar to the GPT-4o mini mannequin which proved so profitable over the previous yr. It additionally introduces chain of thought reasoning to deeply suppose by way of issues, adjustable reasoning ranges to regulate pondering capabilities on-the-fly, expanded context size, and effectivity tweaks to assist it run on native {hardware}, like NVIDIA’s GeForce RTX 50 Collection GPUs.

However you’ll need the precise graphics card if you wish to get the most effective efficiency. NVIDIA’s GeForce RTX 5090 is its flagship card that’s super-fast for gaming and a variety {of professional} workloads. With its Blackwell structure, tens of hundreds of CUDA cores, and 32GB of reminiscence, it’s an incredible match for working native AI.

Llama.cpp is an open-source framework that permits you to run LLMs (giant language fashions) with nice efficiency particularly on RTX GPUs because of optimizations made in collaboration with NVIDIA. Llama.cpp provides plenty of flexibility to regulate quantization methods and CPU offloading.

Llama.cpp has revealed their very own checks of gpt-oss-20b, the place the GeForce RTX 5090 topped the charts at a formidable 282 tok/s. That’s compared to the Mac M3 Extremely (116 tok/s) and AMD’s 7900 XTX (102 tok/s). The GeForce RTX 5090 consists of built-in Tensor Cores designed to speed up AI duties maximizing efficiency working gpt-oss-20b domestically.

Observe: Tok/s, or tokens per second, measures tokens, a piece of textual content that the mannequin reads or outputs in a single step, and the way shortly they are often processed.

NVIDIA

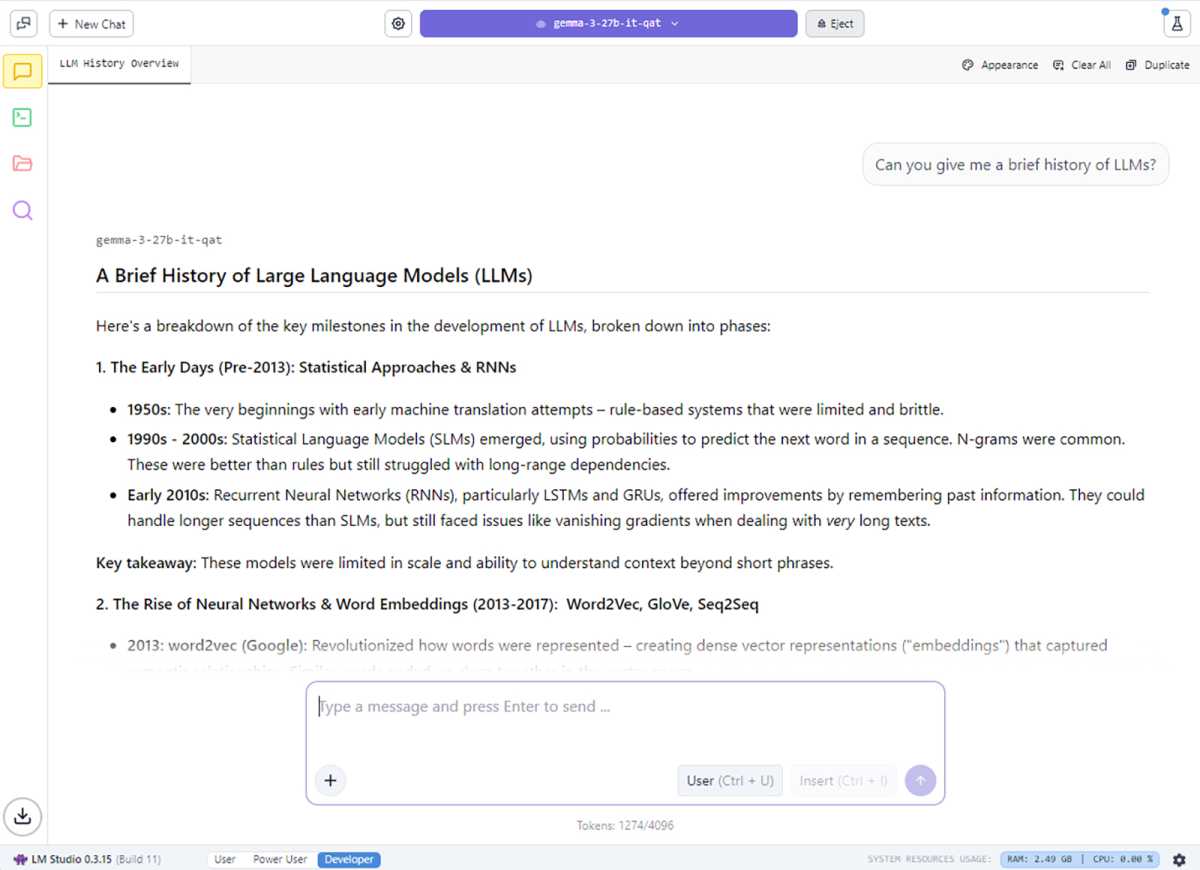

For AI fanatics that simply wish to use native LLMs with these NVIDIA optimizations, contemplate the LM Studio utility, constructed on prime of Llama.cpp. LM Studio provides help for RAG (retrieval-augmented technology) and is designed to make working and experimenting with giant LLMs simple—while not having to wrestle with command-line instruments or deep technical setup.

NVIDIA

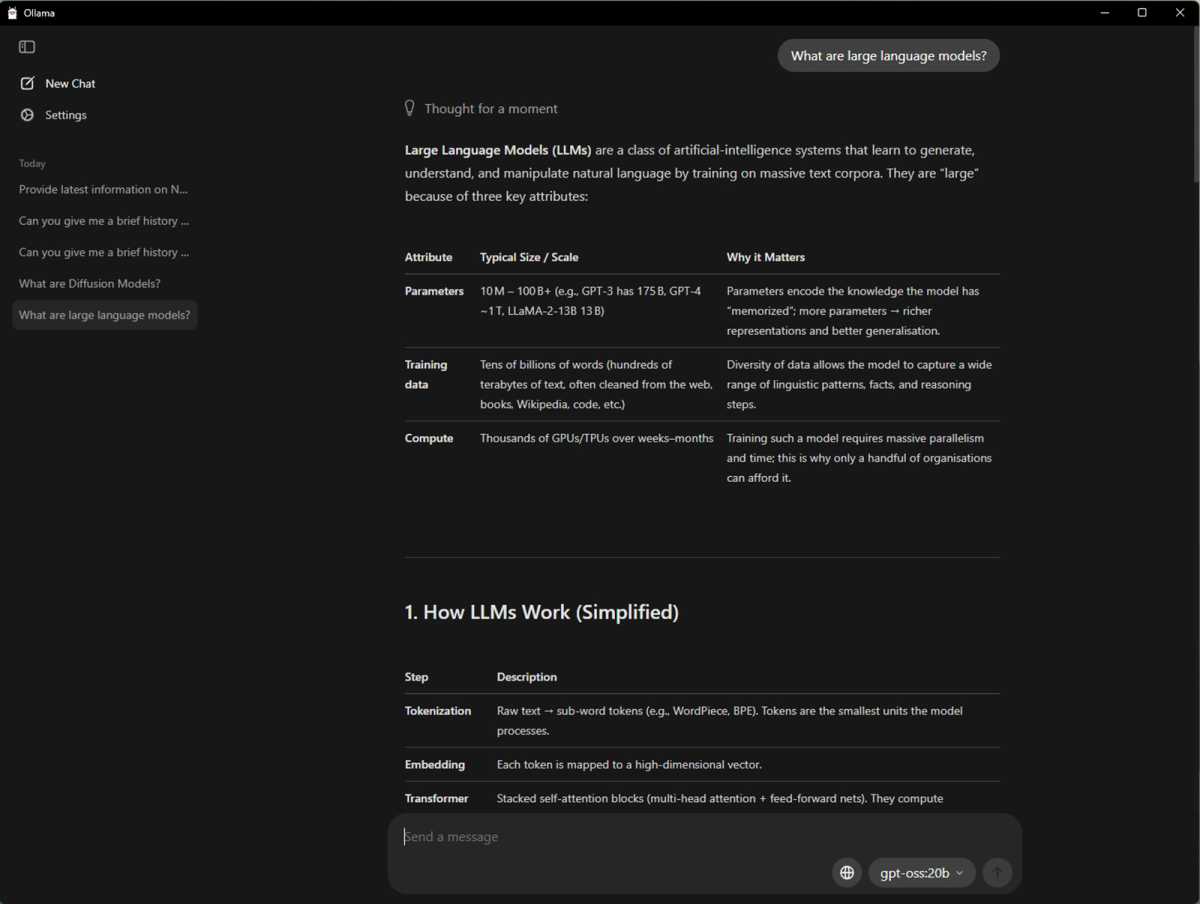

One other standard open supply framework for AI testing and experimentation is Ollama. It’s nice for attempting out completely different AI fashions, together with the OpenAI gpt-oss fashions, and NVIDIA labored carefully to optimize efficiency, so that you’ll get nice outcomes working it on an NVIDIA GeForce RTX 50 Collection GPU. It handles mannequin downloads, setting setup and GPU acceleration mechanically, in addition to built-in mannequin administration to help a number of fashions concurrently, integrating simply with functions and native workflows.

Ollama additionally provides a straightforward manner for finish customers to check the most recent gpt-oss mannequin. And in an identical solution to llama.cpp, different functions additionally make use of Ollama to run LLMs. One such instance is AnythingLLM with its simple, native interface making it wonderful for these simply getting began with LLM benchmarking.

NVIDIA

You probably have one of many newest NVIDIA GPUs (and even if you happen to don’t, however don’t thoughts the efficiency hit), you’ll be able to check out gpt-oss-20b your self on a variety of platforms. LM Studio is nice if you would like a slick, intuitive interface that permits you to seize any mannequin you wish to check out and it really works on Home windows, macOS, and Linux equally properly.

AnythingLLM is one other easy-to-use choice for working gpt-oss-20b and it really works on each Home windows x64 and Home windows on ARM. There’s additionally Ollama, which isn’t as slick to have a look at, but it surely’s nice if you understand what you’re doing and wish to get setup shortly.

Whichever utility you utilize to mess around with gpt-oss-20b, although, the most recent NVIDIA Blackwell GPUs appear to supply the most effective efficiency.